Deploy Deep Learning Models¶

TVM is capable of deploying models to a variety of different platforms. These how-tos describe how to prepapre and deploy models to many of the supported backends.

Deploy the Pretrained Model on Adreno™ with tvmc Interface

Deploy the Pretrained Model on Adreno™ with tvmc Interface

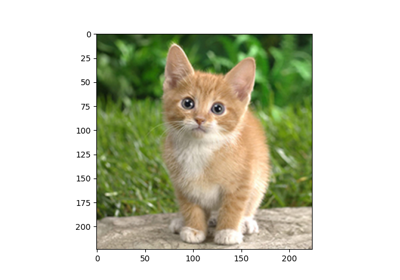

Deploy a Framework-prequantized Model with TVM - Part 3 (TFLite)

Deploy a Framework-prequantized Model with TVM - Part 3 (TFLite)