Introduction

Authors: Jocelyn Shiue, Chris Hoge, Lianmin Zheng

Apache TVM is an open source machine learning compiler framework for CPUs, GPUs, and machine learning accelerators. It aims to enable machine learning engineers to optimize and run computations efficiently on any hardware backend. This tutorial takes a guided tour through the major features of TVM, defining and demonstrating its key concepts. A new user who works through it from start to finish should be able to use TVM for automatic model optimization and should come away with a basic understanding of the TVM architecture and how it works.

An Overview of TVM and Model Optimization

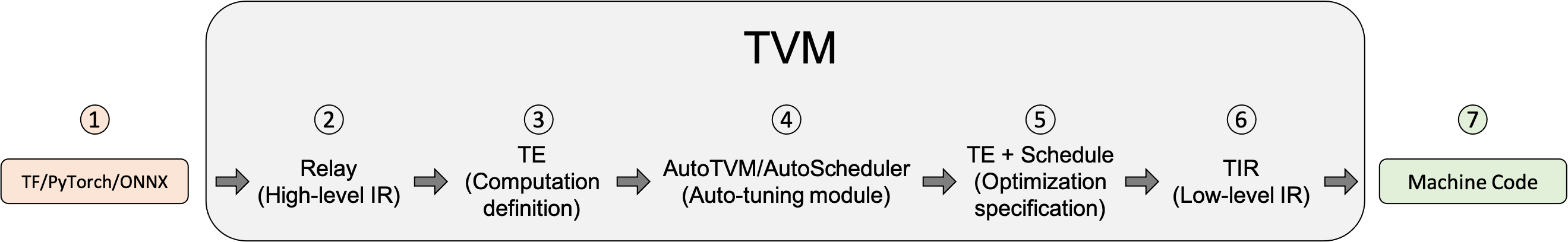

The diagram below illustrates the steps a machine model takes as it is transformed with the TVM optimizing compiler framework.

Import the model from a framework like TensorFlow, PyTorch, or ONNX. The importer layer is where TVM ingests models from other frameworks. The level of support that TVM offers for each frontend varies, as we are constantly improving the open source project. If you're having issues importing your model into TVM, you may want to try converting it to ONNX.

Translate to Relay, TVM’s high-level model language. A model that has been imported into TVM is represented in Relay. Relay is a functional language and intermediate representation (IR) for neural networks. It has support for:

Traditional data flow-style representations

Functional-style scoping and let-binding, which make it a fully featured differentiable language

The ability to mix the two programming styles

Relay applies graph-level optimization passes to optimize the model.
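To make the idea of a graph-level pass concrete, here is a minimal sketch of constant folding, one classic optimization of this kind, over a hypothetical toy IR with constants, variables, addition, multiplication, and let-bindings. The node classes and `fold_constants` are illustrative inventions, not Relay's Python API; Relay ships its own `FoldConstant` pass that works on Relay's IR through TVM's pass infrastructure.

```python
from dataclasses import dataclass
from typing import Union


# Hypothetical toy IR nodes (NOT Relay classes), used only for illustration.
@dataclass(frozen=True)
class Const:
    value: float


@dataclass(frozen=True)
class Var:
    name: str


@dataclass(frozen=True)
class Add:
    lhs: "Expr"
    rhs: "Expr"


@dataclass(frozen=True)
class Mul:
    lhs: "Expr"
    rhs: "Expr"


@dataclass(frozen=True)
class Let:
    var: Var
    value: "Expr"
    body: "Expr"


Expr = Union[Const, Var, Add, Mul, Let]


def fold_constants(expr, env=None):
    """Return an equivalent expression with constant subtrees evaluated."""
    env = env or {}
    if isinstance(expr, Const):
        return expr
    if isinstance(expr, Var):
        # Substitute a variable only if it is bound to a constant in scope.
        return env.get(expr.name, expr)
    if isinstance(expr, (Add, Mul)):
        lhs = fold_constants(expr.lhs, env)
        rhs = fold_constants(expr.rhs, env)
        if isinstance(lhs, Const) and isinstance(rhs, Const):
            if isinstance(expr, Add):
                return Const(lhs.value + rhs.value)
            return Const(lhs.value * rhs.value)
        return type(expr)(lhs, rhs)
    if isinstance(expr, Let):
        value = fold_constants(expr.value, env)
        if isinstance(value, Const):
            # Constant binding: propagate it into the body and drop the let.
            return fold_constants(expr.body, {**env, expr.var.name: value})
        # Non-constant binding shadows any outer constant with the same name.
        inner = {k: v for k, v in env.items() if k != expr.var.name}
        return Let(expr.var, value, fold_constants(expr.body, inner))
    raise TypeError(f"unknown node: {expr!r}")


# let x = 2 * 3 in x + y   ==>   6 + y
x, y = Var("x"), Var("y")
print(fold_constants(Let(x, Mul(Const(2.0), Const(3.0)), Add(x, y))))
# Add(lhs=Const(value=6.0), rhs=Var(name='y'))
```

The let-binding case shows why scoping matters to a pass: a binding can only be propagated into the body it scopes over, and an inner binding of the same name must hide the outer one.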

Lower to Tensor Expression (TE) representation. Lowering is when a higher-level representation is transformed into a lower-level representation. After applying the high-level optimizations, Relay runs the FuseOps pass to partition the model into many small subgraphs and lowers the subgraphs to TE representation. Tensor Expression (TE) is a domain-specific language for describing tensor computations. TE also provides several schedule primitives to specify low-level loop optimizations, such as tiling, vectorization, parallelization, unrolling, and fusion. To aid in the process of converting Relay representation into TE representation, TVM includes a Tensor Operator Inventory (TOPI) that has pre-defined templates of common tensor operators (e.g., conv2d, transpose).
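TE keeps *what* is computed separate from *how* its loops run. The plain-Python sketch below (not TE code; `matmul_naive` and `matmul_tiled` are hypothetical names) writes the same matrix multiply twice: once as a straightforward loop nest and once after tiling the `i` and `j` loops, which is the kind of transformation a tiling schedule primitive applies. The results are identical; only the loop structure changes.

```python
import random


def matmul_naive(a, b, n):
    """Reference loop nest: C[i][j] = sum over k of A[i][k] * B[k][j]."""
    c = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            for k in range(n):
                c[i][j] += a[i][k] * b[k][j]
    return c


def matmul_tiled(a, b, n, tile):
    """Same computation after splitting the i and j loops by `tile` and
    reordering them so each (io, jo) pair visits one tile x tile block."""
    c = [[0.0] * n for _ in range(n)]
    for io in range(0, n, tile):                    # outer loop over row tiles
        for jo in range(0, n, tile):                # outer loop over column tiles
            for i in range(io, min(io + tile, n)):  # inner loops stay in one tile
                for j in range(jo, min(jo + tile, n)):
                    acc = 0.0
                    for k in range(n):
                        acc += a[i][k] * b[k][j]
                    c[i][j] = acc
    return c


n = 10  # deliberately not a multiple of the tile size
a = [[random.randint(-5, 5) for _ in range(n)] for _ in range(n)]
b = [[random.randint(-5, 5) for _ in range(n)] for _ in range(n)]
print(matmul_naive(a, b, n) == matmul_tiled(a, b, n, tile=4))  # True
```

In TE the tiling would be requested on the schedule rather than written out by hand; the hand-written version only shows the effect such a primitive has on the loop nest.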

Search for the best schedule using the auto-tuning module AutoTVM or AutoScheduler. A schedule specifies the low-level loop optimizations for an operator or subgraph defined in TE. Auto-tuning modules search for the best schedule, comparing candidate schedules using cost models and on-device measurements. There are two auto-tuning modules in TVM.

AutoTVM: A template-based auto-tuning module. It runs search algorithms to find the best values for the tunable knobs in a user-defined template. For common operators, their templates are already provided in TOPI.

AutoScheduler (a.k.a. Ansor): A template-free auto-tuning module. It does not require pre-defined schedule templates. Instead, it generates the search space automatically by analyzing the computation definition. It then searches for the best schedule in the generated search space.
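The template-based idea behind AutoTVM can be sketched in plain Python: a template is a function of tunable knobs, and the tuner measures each configuration and keeps the fastest. Everything here (`transpose_template`, `measure`, `grid_search`, and the knob names `tile_i` and `tile_j`) is hypothetical and much simpler than AutoTVM, whose tuners can use a cost model to avoid measuring every candidate.

```python
import itertools
import time


def transpose_template(cfg):
    """Hypothetical schedule template: returns a transpose kernel whose
    loop tiling is controlled by the tunable knobs in `cfg`."""
    ti, tj = cfg["tile_i"], cfg["tile_j"]

    def kernel(a):
        rows, cols = len(a), len(a[0])
        out = [[0] * rows for _ in range(cols)]
        for io in range(0, rows, ti):
            for jo in range(0, cols, tj):
                for i in range(io, min(io + ti, rows)):
                    for j in range(jo, min(jo + tj, cols)):
                        out[j][i] = a[i][j]
        return out

    return kernel


def measure(kernel, data, repeats=3):
    """Best-of-N wall-clock time, standing in for an on-device measurement."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        kernel(data)
        best = min(best, time.perf_counter() - start)
    return best


def grid_search(template, knobs, data):
    """Exhaustively try every knob combination; return (best, all_results)."""
    names = list(knobs)
    results = []
    for values in itertools.product(*(knobs[k] for k in names)):
        cfg = dict(zip(names, values))
        results.append((measure(template(cfg), data), cfg))
    return min(results, key=lambda r: r[0]), results


data = [[i * 64 + j for j in range(64)] for i in range(64)]
knobs = {"tile_i": [1, 8, 32], "tile_j": [1, 8, 32]}
(best_time, best_cfg), records = grid_search(transpose_template, knobs, data)
print(best_cfg, f"{best_time * 1e3:.3f} ms")
```

Because this runs in the Python interpreter, the timings mostly reflect interpreter overhead rather than cache behavior, so the winning configuration is not meaningful here; the point is the shape of the search loop. A template-free tuner such as AutoScheduler would instead derive the knobs and their ranges from the computation itself.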

Choose the optimal configurations for model compilation. After tuning, the auto-tuning module generates tuning records in JSON format. This step picks the best schedule for each subgraph.
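Picking the best schedule per subgraph from tuning records amounts to a grouped minimum. The sketch below assumes a simplified, hypothetical record layout (one JSON object per line with `subgraph`, `config`, and `cost_ms` fields) and invented numbers; TVM's actual log format carries more information and is read through TVM's own APIs. `pick_best` is likewise a hypothetical helper.

```python
import json

# Hypothetical, simplified tuning records with invented costs.
LOG = """\
{"subgraph": "conv2d_relu_0", "config": {"tile": 8}, "cost_ms": 1.92}
{"subgraph": "conv2d_relu_0", "config": {"tile": 16}, "cost_ms": 1.41}
{"subgraph": "dense_1", "config": {"tile": 4}, "cost_ms": 0.37}
{"subgraph": "dense_1", "config": {"tile": 32}, "cost_ms": 0.52}
"""


def pick_best(lines):
    """Return {subgraph: record}, keeping the lowest-cost record per subgraph."""
    best = {}
    for line in lines:
        if not line.strip():
            continue  # tolerate blank lines
        rec = json.loads(line)
        key = rec["subgraph"]
        if key not in best or rec["cost_ms"] < best[key]["cost_ms"]:
            best[key] = rec
    return best


for name, rec in pick_best(LOG.splitlines()).items():
    print(name, rec["config"], rec["cost_ms"])
# conv2d_relu_0 {'tile': 16} 1.41
# dense_1 {'tile': 4} 0.37
```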

Lower to Tensor Intermediate Representation (TIR), TVM's low-level intermediate representation. After selecting the optimal configurations based on the tuning step, each TE subgraph is lowered to TIR and optimized by low-level optimization passes. Next, the optimized TIR is lowered to the target compiler of the hardware platform. This is the final code generation phase, which produces an optimized model that can be deployed into production. TVM supports several different compiler backends, including:

LLVM, which can target arbitrary microprocessor architectures, including standard x86 and ARM processors, AMDGPU and NVPTX code generation, and any other platform supported by LLVM.

Specialized compilers, such as NVCC, NVIDIA’s compiler.

Embedded and specialized targets, which are implemented through TVM’s Bring Your Own Codegen (BYOC) framework.
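As a rough illustration of the final code generation step, the sketch below turns a hypothetical one-loop IR node into C source text, which a C compiler would then turn into machine code. `ElementwiseLoop` and `codegen_c` are invented for this example; TVM's real backends, such as LLVM, generate code from TIR and are far more involved.

```python
from dataclasses import dataclass


@dataclass
class ElementwiseLoop:
    """Hypothetical toy loop IR: out[i] = <expr> for i in [0, extent)."""
    name: str
    extent: int
    expr: str  # C expression over a[i] and b[i]


def codegen_c(loop):
    """Emit a C function implementing the loop as plain source text."""
    return (
        "#include <math.h>\n"
        f"void {loop.name}(const float *a, const float *b, float *out) {{\n"
        f"  for (int i = 0; i < {loop.extent}; ++i) {{\n"
        f"    out[i] = {loop.expr};\n"
        "  }\n"
        "}\n"
    )


print(codegen_c(ElementwiseLoop("fused_add_relu", 1024, "fmaxf(a[i] + b[i], 0.0f)")))
```

The printed function is ordinary C; compiling it with any C compiler is the "compile down to machine code" step in miniature.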

Compile down to machine code. At the end of this process, the compiler-specific generated code can be lowered to machine code.

TVM can compile models down to a linkable object module, which can then be run with a lightweight TVM runtime that provides C APIs to dynamically load the model, and entry points for other languages such as Python and Rust. TVM can also build a bundled deployment in which the runtime is combined with the model in a single package.

The remainder of the tutorial will cover these aspects of TVM in more detail.